Introduction to Design-Led AI: a design thinking approach to AI

It is hard not to be excited about the potential to integrate AI into your business. But today, 73% of companies get stuck with AI projects in the experimentation or POC phase. Why? People don’t trust AI. That is true of stakeholders inside your organization and of end users outside it. This lack of trust stops even great AI projects from succeeding.

That’s why we are proud to share Design-Led AI, a process that we believe can help. By focusing on building trust and showing a clear return on investment (ROI), Design-Led AI helps businesses use AI in a way that works for everyone. This guide will explain how you can solve problems, build trust, and achieve your goals with Design-Led AI.

What Is Design-Led AI?

Design-Led AI is a way of creating AI systems that make humans the heroes, emphasize trust, and deliver ROI. Instead of only thinking about the technology, Design-Led AI makes sure systems are easy to use, clear, and helpful. It balances innovation with what users need and want.

Why Big Companies Struggle with AI

Unlike startups, big companies have older systems, strict rules, and a lot of employees. This makes using AI harder. Also, leaders may be unsure about using AI if they don’t see clear benefits or feel it’s risky. The leaders we speak with consistently raise concerns about sharing their proprietary data with big tech or foundation model companies.

And end users are, at best, confused by today’s AI interfaces.

The Trust Problem in AI

Mainstream enterprises struggle with:

- Opaque sources: it’s hard to know where AI gets its answers from

- Hallucinations: AI makes something up and presents it as fact

- Clumsy workflows

- Regulatory uncertainty

End users:

- Stare at a blank prompt and don’t know where to start

- Worry about privacy and how their data is used

- Lose trust when AI decisions are biased

Why Trust Matters

Without trust:

- Leaders inside the company don’t want to fund or expand AI projects.

- Users avoid AI tools because they’re afraid of mistakes, unfair decisions, or data misuse.

Building trust takes time and effort. Companies need to show that they are transparent and collaborative, and that they deliver good results.

Key differences when designing for AI

Designing software that has AI at its core is not the same as designing traditional software interfaces. The main differences are outlined here.

Unpredictable interfaces

AI is non-deterministic, which means that interfaces can change dynamically based on users’ needs and the available data.

Explainable AI

AI systems can be a black box, which means designers need to explain how decisions are made. When people understand why something is shown, they are more likely to trust it.

Evolution over time

AI systems learn, adapt and degrade based on user interactions and new data.

Feedback loops

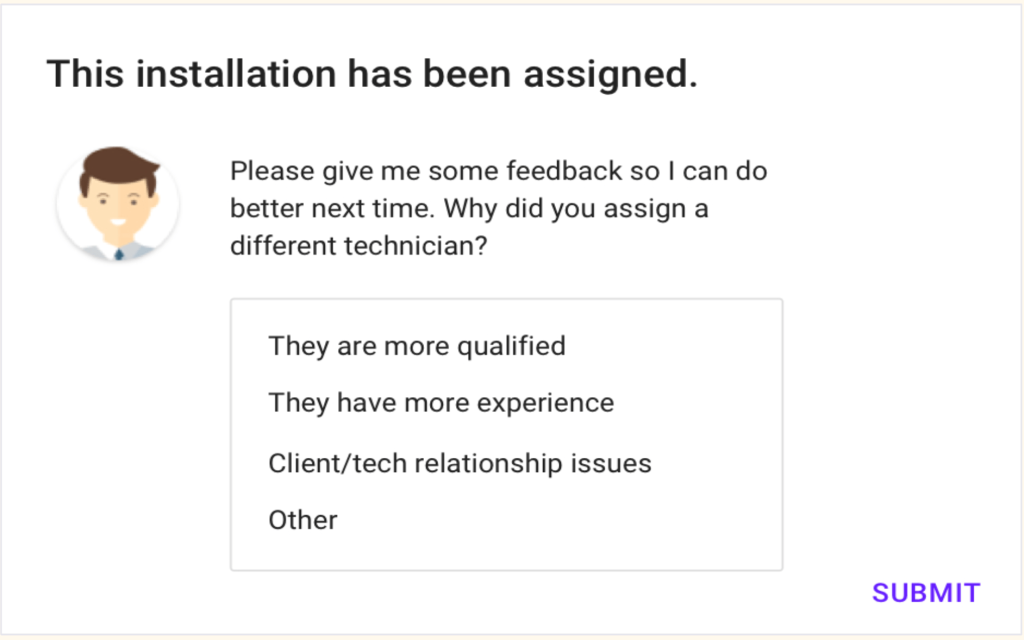

Feedback from users is key to making AI better. Designers should create interfaces that encourage user feedback.

Data & bias

AI relies heavily on data, meaning designers need to consider the data’s quality, quantity, and biases. Designers can also create systems that help the data improve over time.

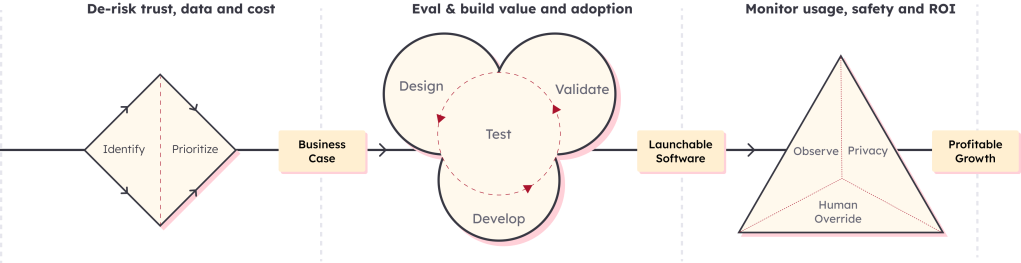

The Design-Led AI Process

AI solutions require a unique design process that focuses on ROI and trust.

Design-led AI builds trust from the inside out so that both internal stakeholders and end-users feel confident in the technology.

It has three major components:

- Select the right use case to prove ROI and build momentum. This also reduces risk around trust, data, and cost

- Use specific design approaches for AI solutions. This evaluate-and-build process drives value and adoption on the way to launchable software

- Run quantitative and qualitative tests to build trust and model confidence, with ongoing observation and monitoring of ROI, safety, and usage, to ultimately deliver profitable growth

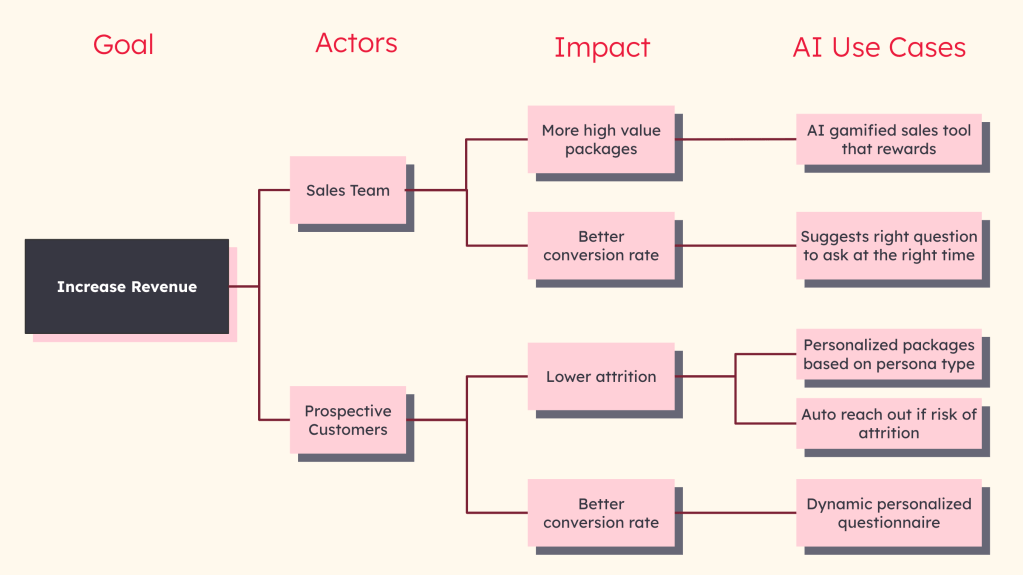

Find the right use cases

To get the best results, companies should focus on AI projects with the most impact. We have a more detailed blog post on how to identify the right AI use case with tools like our collaborative AI impact mapping workshop.

You can also identify promising AI use cases through traditional user research. For example, we worked with PwC and Google on a Field Services Operations platform and did onsite contextual inquiry with a gas station repair company. In less than a week of research, we identified that preparation for job assignments could be improved 10x through the use of AI. We found that return trips and customer satisfaction were most negatively affected when jobs were assigned without the correct information.

Prioritize AI use cases

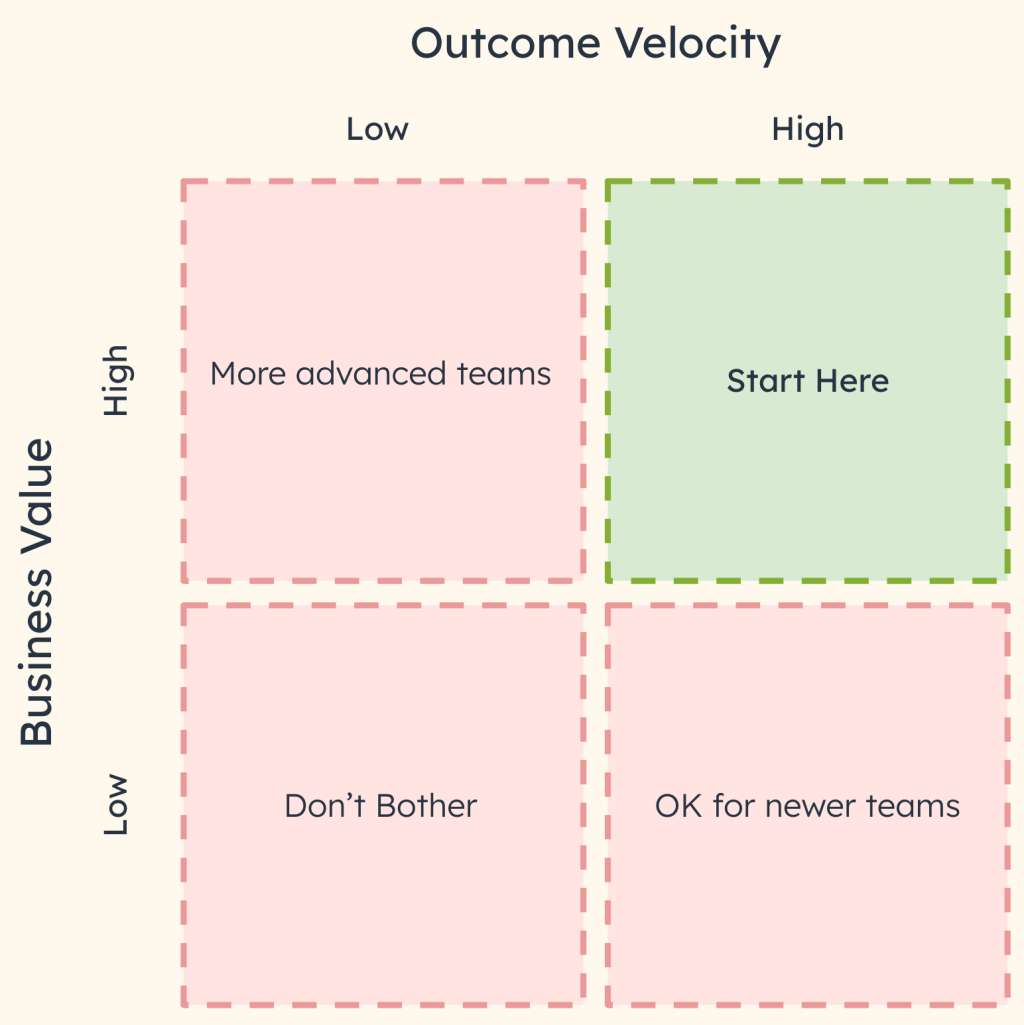

Once you have identified a number of AI use cases, you need to prioritize them based on where your organization lies on the AI readiness curve.

We recommend evaluating use cases on two axes:

- Business Value: How much can AI improve outcomes?

- Outcome Velocity: How ready is the team, and is the data good enough?

Scoring on both axes helps you pick projects that are both valuable and doable.

When teams are earlier in their journey, we recommend finding quick wins to build momentum and show value.
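As a rough illustration, the two dimensions above can be turned into a simple scoring sheet. This is a minimal sketch, not our rater tool: the 1 to 5 scale, the midpoint, the quadrant labels, and the sample use cases are all assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    business_value: int    # 1 (low) to 5 (high): how much can AI improve outcomes?
    outcome_velocity: int  # 1 (low) to 5 (high): team readiness and data quality


def quadrant(uc: UseCase, midpoint: int = 3) -> str:
    """Place a use case on the value/velocity grid (labels are hypothetical)."""
    high_value = uc.business_value >= midpoint
    high_velocity = uc.outcome_velocity >= midpoint
    if high_value and high_velocity:
        return "quick win"
    if high_value:
        return "strategic bet"
    if high_velocity:
        return "low-value filler"
    return "deprioritize"


def prioritize(use_cases: list[UseCase]) -> list[UseCase]:
    """Rank by combined score; ties go to the use case that can ship sooner."""
    return sorted(
        use_cases,
        key=lambda uc: (uc.business_value * uc.outcome_velocity, uc.outcome_velocity),
        reverse=True,
    )


# Hypothetical candidates with made-up scores.
candidates = [
    UseCase("Fully autonomous dispatch", business_value=5, outcome_velocity=1),
    UseCase("Job preparation assistant", business_value=5, outcome_velocity=4),
    UseCase("Meeting note summaries", business_value=2, outcome_velocity=5),
]

for uc in prioritize(candidates):
    print(f"{uc.name}: {quadrant(uc)}")
```

Multiplying the two scores is only one possible weighting. Teams early in their journey may want to weight velocity more heavily so that quick wins rise to the top.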

If you need help prioritizing use cases, check out our AI project rater tool, which guides you through this prioritization process with a quick survey. Or we would be happy to help you coordinate feedback across your organization through collaborative workshops.

Build the business case

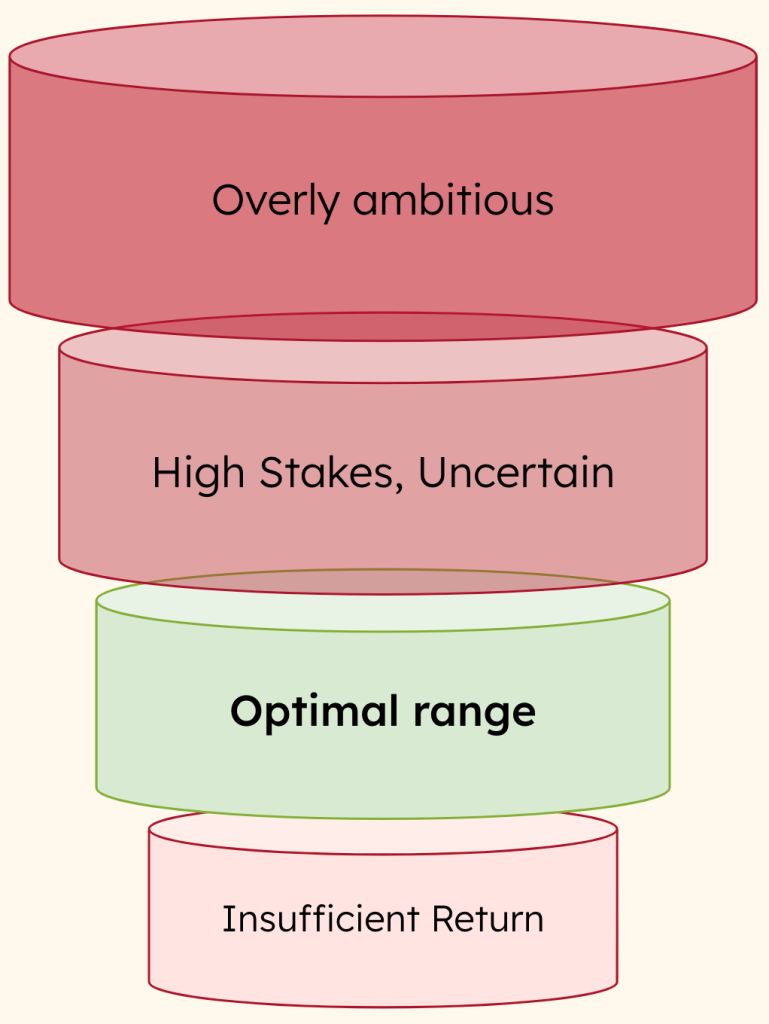

Once use cases have been prioritized, it is important to create business cases to get leadership buy-in. Many AI projects fail because they are not aligned to business strategy. They simply exist because someone thought it would be cool to have AI. This is not a recipe for long-term success.

We recommend “niching down” on the use case you found to the area with the best ROI. Too many teams attempt overly ambitious AI projects that sound great on paper but are very difficult to execute, and the project ultimately never gets out of POC mode.

Focus means it is easier to:

- Test the value of the idea and start showing an impact quickly

- Keep security risk minimized by not having too much data exposed to models

- Reduce risk of “AI Slop” in the early phases of experimentation

As part of your business case, make sure to estimate the Total Cost of Ownership (TCO) of the system, as many AI projects balloon in cost when this is not planned for up front. Learn more about our FinOps approach to build profitable AI projects.
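A back-of-the-envelope model can make the cost question concrete. The sketch below is an assumption-heavy illustration rather than a FinOps method: it assumes per-token pricing, and every number (request volume, token counts, prices, fixed costs) is hypothetical and should be replaced with your own figures.

```python
from dataclasses import dataclass, replace


@dataclass
class UsageAssumptions:
    requests_per_month: int
    input_tokens_per_request: int
    output_tokens_per_request: int
    price_per_1k_input_tokens: float   # hypothetical price, in your currency
    price_per_1k_output_tokens: float  # hypothetical price, in your currency
    fixed_monthly_costs: float         # hosting, monitoring, human review, etc.


def monthly_cost(u: UsageAssumptions) -> float:
    """Variable model spend plus fixed costs for one month."""
    per_request = (
        u.input_tokens_per_request / 1000 * u.price_per_1k_input_tokens
        + u.output_tokens_per_request / 1000 * u.price_per_1k_output_tokens
    )
    return u.requests_per_month * per_request + u.fixed_monthly_costs


# Made-up pilot numbers, then the same system at 50x the traffic.
pilot = UsageAssumptions(
    requests_per_month=10_000,
    input_tokens_per_request=2_000,
    output_tokens_per_request=500,
    price_per_1k_input_tokens=0.01,
    price_per_1k_output_tokens=0.03,
    fixed_monthly_costs=1_500.0,
)
rollout = replace(pilot, requests_per_month=500_000)

print(f"Pilot:   {monthly_cost(pilot):,.2f} per month")
print(f"Rollout: {monthly_cost(rollout):,.2f} per month")
```

Running the same model for the pilot and for a full rollout shows how quickly variable model spend can overtake fixed costs as usage grows.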

Prepare for Trustworthy AI

You have built the business case and got approval from leadership on your AI initiative and approach. Before you begin coding and designing we recommend that you prepare your project for a trustworthy future. Processes and workshops that we encourage all our clients to do include:

Empathy Mapping for the Machine

This workshop, a twist on traditional user empathy mapping, helps us understand what is needed to train the model, what the model needs to show humans, and what information it could recommend.

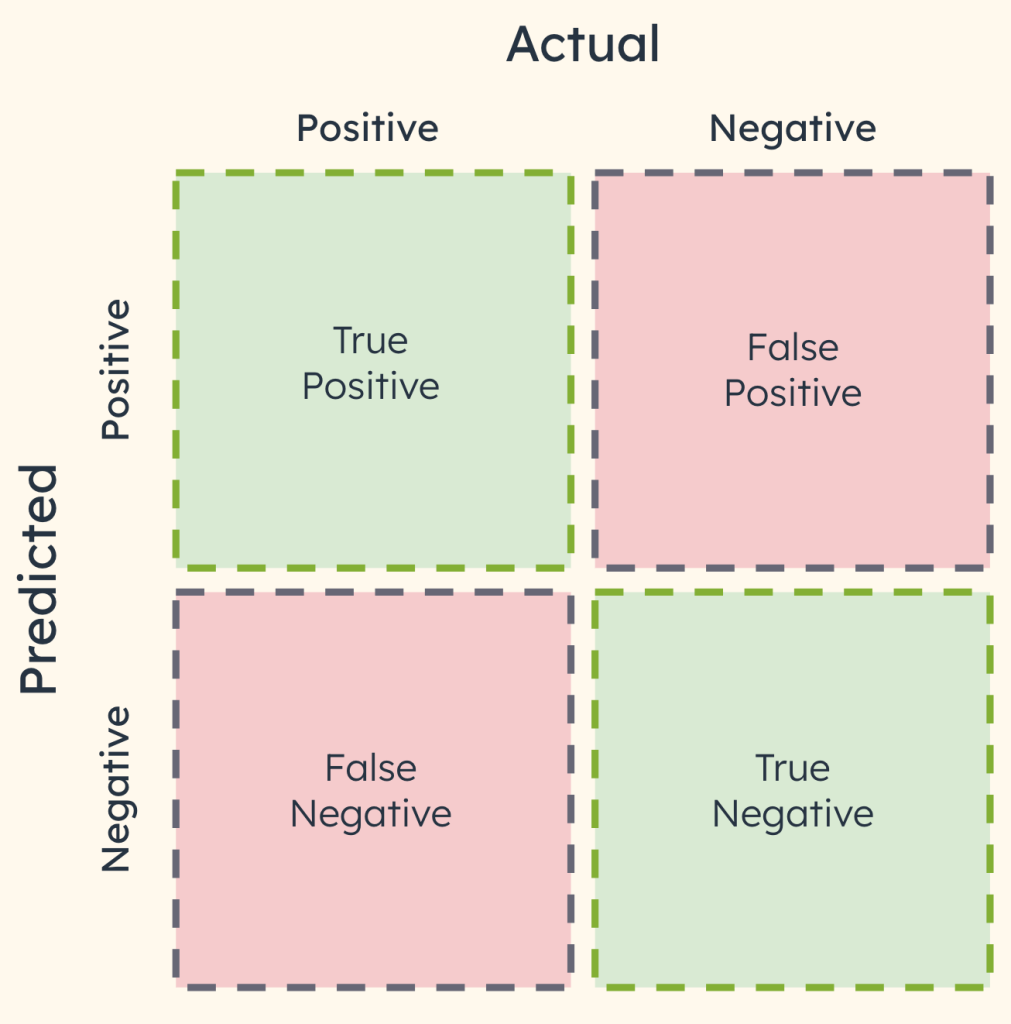

Confusion Mapping for the Machine

Before building a solution, we map out what can go wrong. This allows us to understand the risks and tradeoffs. In non-deterministic systems, it is important to recognize that results will never be perfect. Sometimes even the most accurate models are not the best for the business, and fine-tuning a model to the nth degree is rarely a high-ROI activity.

AI-based Service Mapping

Building on traditional service blueprint mapping, we make some unique additions for AI-first projects. We map out the end-to-end journey and mark the areas where:

- The system needs information from a human

- The machine makes a decision

- A human can intervene

- The risk is high

Build your data set

AI projects are only as good as their data. The end-to-end data engineering pipeline from collection to processing to deployment is important and covered in more depth in another post. But there are specific actions you can take in the design and prototyping phase to help set your project up for success.

We recommend that you:

- Invest early in good data practices

- Expect and embrace messy data

- Give data labelers well-designed tools and workflows

- Actively maintain your dataset, and develop a plan for doing so early on

- Bring in domain and business experts early to analyze data

- Learn from data differences

Prototype

While engineers begin technical spikes and building the software, designers should quickly prototype design interfaces that can be used to test for trust.

When prototyping AI projects you need to think specifically about what makes an AI project different.

- We use specific design patterns that build explainability and trust

- We explain to users, in simple terms, how the AI is making decisions

- We design easy controls for humans to override and improve the AI

Other AI design patterns

Beyond these we recommend a few other best practices for AI products:

- People care about the benefit, not the tech

- People trust what they understand

- Slow down the process

- Determine how to show model confidence, if at all

- Set realistic expectations

- Don’t be afraid to share more information than you think is necessary

- Use clear and transparent language around data and privacy

- Use familiar patterns

- Expect errors and design for them

- Let people experiment without screwing things up

Make humans the heroes

While AI promises to automate away jobs, the reality is that, for now, humans still need to be in the driver’s seat. AI will make your team much more efficient and open up many possibilities, but you should not think of AI as a simple drop-in replacement for human work or intervention. That is a great way to end up with egg on your face. Just ask Air Canada, which had to pay damages after its AI assistant gave wrong information to a customer flying to a funeral, or Amazon, whose AI recruiting tool was found to favor male candidates.

AI projects that emphasize humans and give them the ability to check and override AI decisions will be the big winners. We have found this in many of our AI projects to date, such as helping Royal Caribbean get people onto cruise ships using facial recognition while still giving crew members the ability to step in if something went wrong or was uncertain.

Using the information from your AI-based service map you can find areas to design experiences that will allow:

- Humans to review AI work and approve or change

- Humans to be alerted if the machine is uncertain

- Humans to review particularly high risk areas

- Humans to provide feedback to the system when they change something to further train or improve models

Make sure that all your human feedback is fed back into the MLOps pipeline for continuous model improvement.
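The review, alert, and feedback behaviors listed above can be sketched as a small routing rule plus a feedback log. This is a minimal illustration under stated assumptions: it assumes the model exposes a usable confidence score, the 0.85 threshold is arbitrary, and `Prediction`, `route`, and `FeedbackLog` are hypothetical names rather than part of any library.

```python
from dataclasses import dataclass, field
from enum import Enum


class Route(Enum):
    AUTO_APPROVE = "auto_approve"
    HUMAN_REVIEW = "human_review"


@dataclass
class Prediction:
    item_id: str
    output: str
    confidence: float        # 0.0 to 1.0, assumed to be reported by the model
    high_risk: bool = False  # flagged from the high-risk areas of the service map


def route(pred: Prediction, threshold: float = 0.85) -> Route:
    """Send uncertain or high-risk predictions to a human reviewer."""
    if pred.high_risk or pred.confidence < threshold:
        return Route.HUMAN_REVIEW
    return Route.AUTO_APPROVE


@dataclass
class FeedbackLog:
    """Collects reviewer decisions so they can be exported for model improvement."""
    records: list[dict] = field(default_factory=list)

    def record(self, pred: Prediction, final_output: str, reviewer: str) -> None:
        self.records.append({
            "item_id": pred.item_id,
            "model_output": pred.output,
            "final_output": final_output,
            "overridden": final_output != pred.output,
            "reviewer": reviewer,
        })

    def override_rate(self) -> float:
        if not self.records:
            return 0.0
        return sum(r["overridden"] for r in self.records) / len(self.records)


log = FeedbackLog()
uncertain = Prediction("job-17", "assign technician A", confidence=0.60)
if route(uncertain) is Route.HUMAN_REVIEW:
    # A reviewer changes the suggestion; the override becomes a training signal.
    log.record(uncertain, final_output="assign technician B", reviewer="dispatcher-1")
print(f"Override rate: {log.override_rate():.0%}")
```

Tracking the override rate over time gives a simple signal of whether the model is earning more or less trust from the people who review its work.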

Qualitative and quantitative tests for trust

We use specialized techniques for quantitative and qualitative feedback to make sure that our generated results are accurate and trusted by end users. This is an important process that should run continuously rather than being treated as a one-off exercise. We often test our designs before they are fully developed to make sure we are getting the right balance.

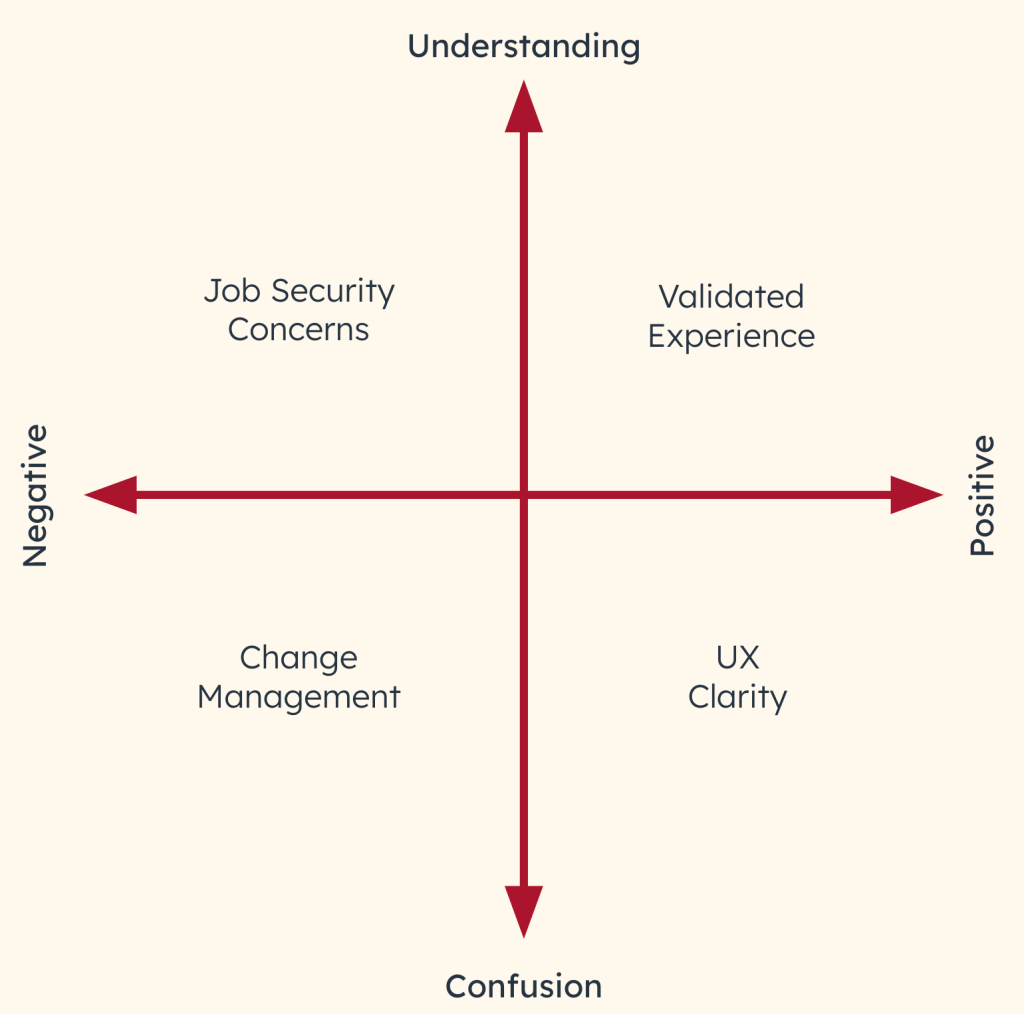

Qualitative User Interviews

We interview users one-on-one for rich qualitative insights. In these specialized interviews, we look to learn about our users’ understanding of AI decisions as well as their perception of them, which can indicate design improvements or the need for change management. Learn more about user testing AI experiences for trust.

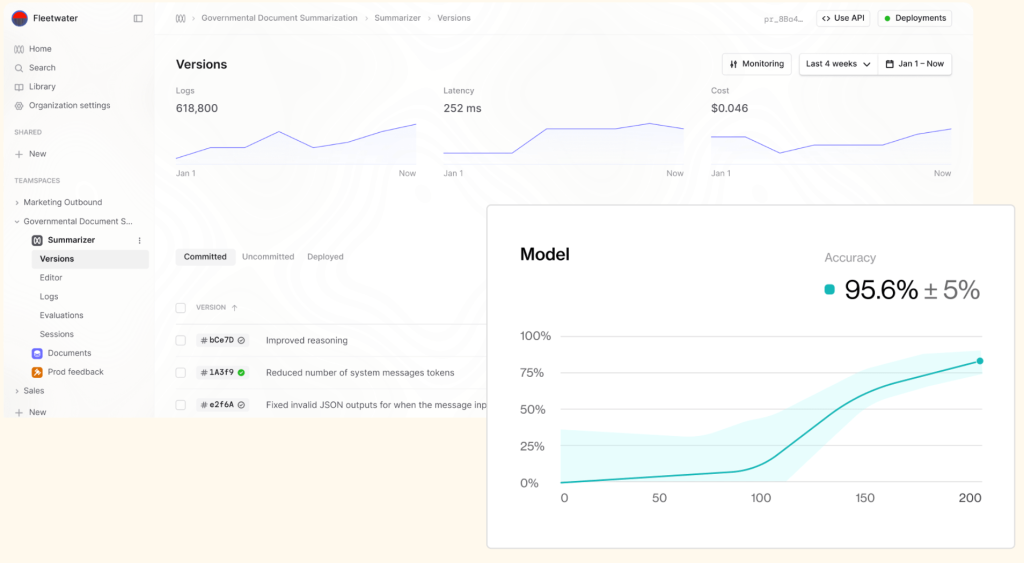

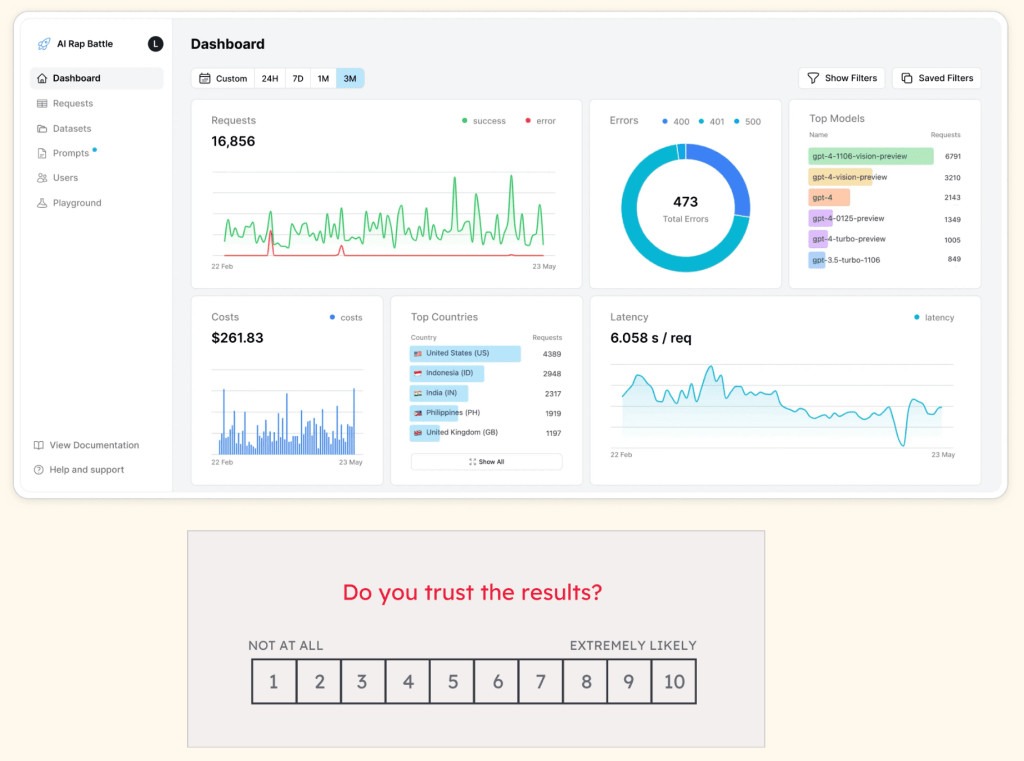

Quantitative Evals

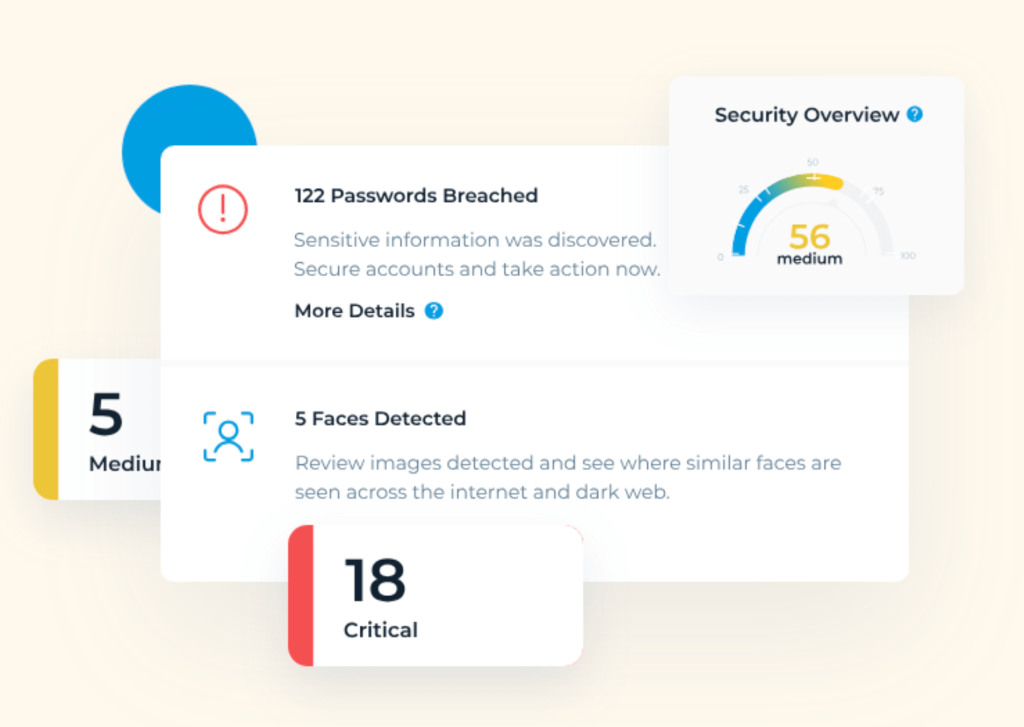

LLM evaluation tools provide quantitative insights into model performance before we launch, helping us make sure we limit hallucinations and errors. We have built custom dashboards and tooling that we can integrate into your project to speed this process up.
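To give a feel for what a quantitative eval does, here is a deliberately tiny harness. It is a sketch, not our tooling or any vendor’s API: `stub_model` stands in for a real model call, the test cases are invented, and the substring check is a crude placeholder for the richer scoring that real evaluation tools provide.

```python
from typing import Callable


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting differences don't fail a case."""
    return " ".join(text.lower().split())


def run_eval(model: Callable[[str], str], cases: list[dict]) -> dict:
    """Run every case through the model and report the pass rate and failures."""
    failures = []
    for case in cases:
        answer = model(case["question"])
        if normalize(case["expected"]) not in normalize(answer):
            failures.append({"question": case["question"], "answer": answer})
    passed = len(cases) - len(failures)
    return {
        "total": len(cases),
        "passed": passed,
        "pass_rate": passed / len(cases) if cases else 0.0,
        "failures": failures,
    }


def stub_model(question: str) -> str:
    """Hypothetical stand-in for a real model call."""
    canned = {
        "What is the refund window?": "Refunds are available within 30 days.",
        "Which plan includes SSO?": "SSO is included in the Starter plan.",  # deliberately wrong
    }
    return canned.get(question, "I don't know.")


# Invented cases; the last one checks that the model admits when it has no answer.
cases = [
    {"question": "What is the refund window?", "expected": "30 days"},
    {"question": "Which plan includes SSO?", "expected": "Enterprise plan"},
    {"question": "Who is the CEO?", "expected": "I don't know"},
]

report = run_eval(stub_model, cases)
print(f"{report['passed']}/{report['total']} passed")
for failure in report["failures"]:
    print("FAILED:", failure["question"], "->", failure["answer"])
```

Including cases where the correct behavior is to decline to answer is one way to measure hallucinations, not just accuracy.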

Guardrails for privacy and security

At this point you should have software that is launchable. You should have a high degree of confidence that your models will work and that your users will trust what you are going to release to them.

Of course, if you release something that ultimately results in a security breach, no amount of trust in an interface is going to matter. People will instantly think poorly of you, and your reputation may never recover.

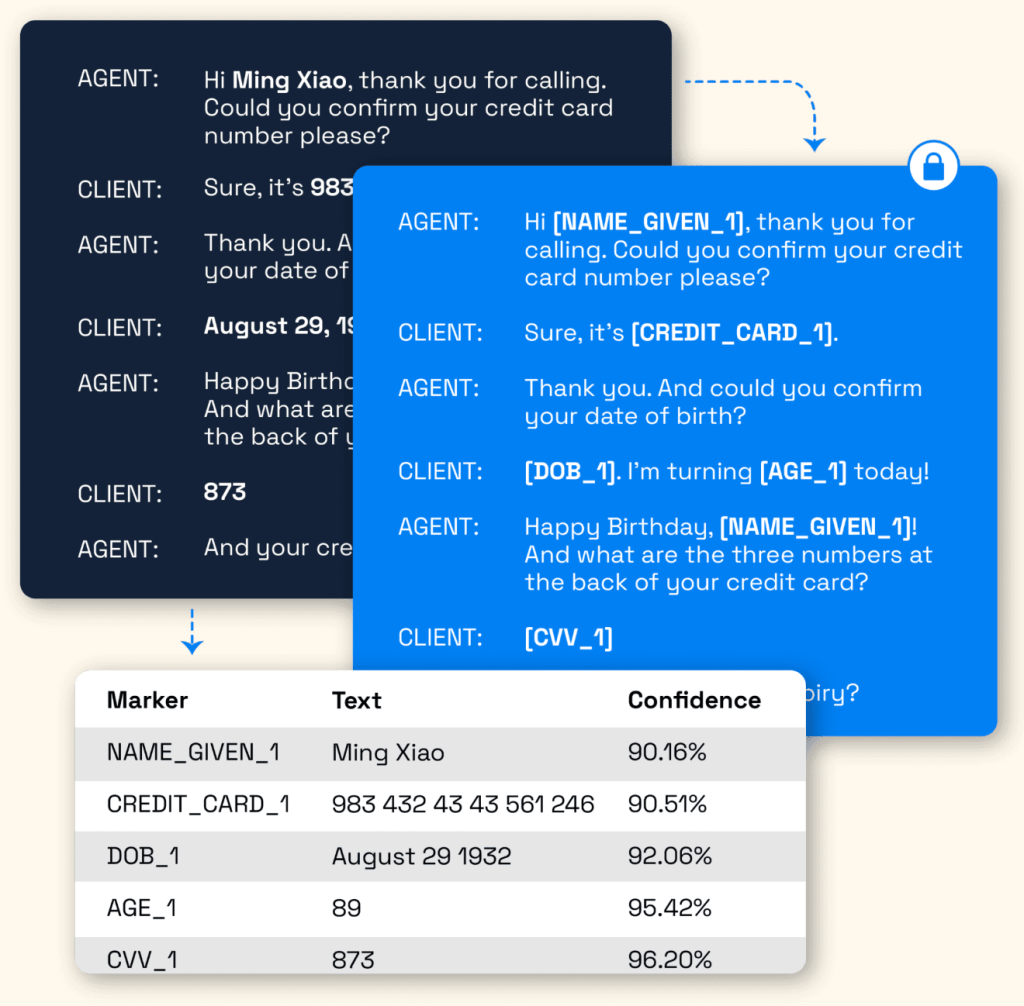

De-identification of PII

We recommend obfuscating user data before sending it to models. You can do this yourself or work with real-time and audited providers such as Private AI or Data Sentinel.
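If you take the do-it-yourself route, the basic idea can be sketched as follows. This is a minimal illustration under loud assumptions: the two regular expressions only catch well-formed email addresses and phone-like number runs, and they will miss names, addresses, and many other identifiers that audited providers are built to handle.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def deidentify(text: str) -> tuple[str, dict[str, str]]:
    """Swap matches for placeholder tokens and return a mapping to restore them."""
    mapping: dict[str, str] = {}
    for label, pattern in PATTERNS.items():
        def _substitute(match: re.Match) -> str:
            token = f"[{label}_{len(mapping) + 1}]"
            mapping[token] = match.group(0)
            return token
        text = pattern.sub(_substitute, text)
    return text, mapping


def reidentify(text: str, mapping: dict[str, str]) -> str:
    """Put the original values back into a model response, on your side only."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


message = "Contact Jane at jane.doe@example.com or +1 (555) 010-9999 about the refund."
safe_message, mapping = deidentify(message)
print(safe_message)  # the name "Jane" is NOT caught by these patterns
```

Keeping the mapping on your own infrastructure means the model only ever sees placeholders, while your application can still show the restored values to authorized users.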

User Clarity

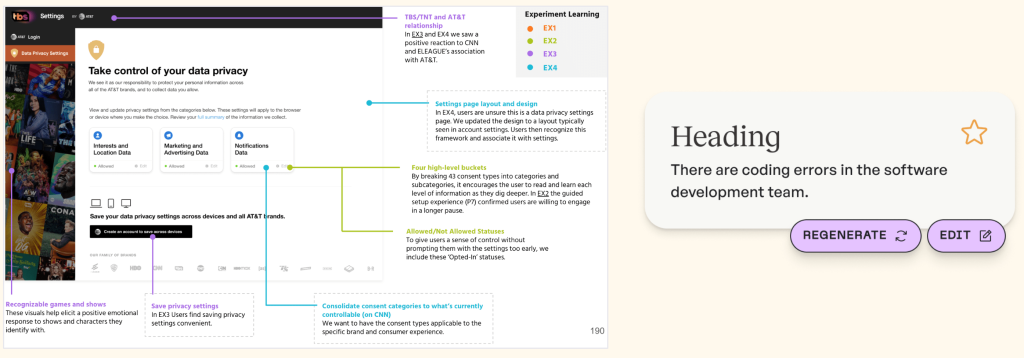

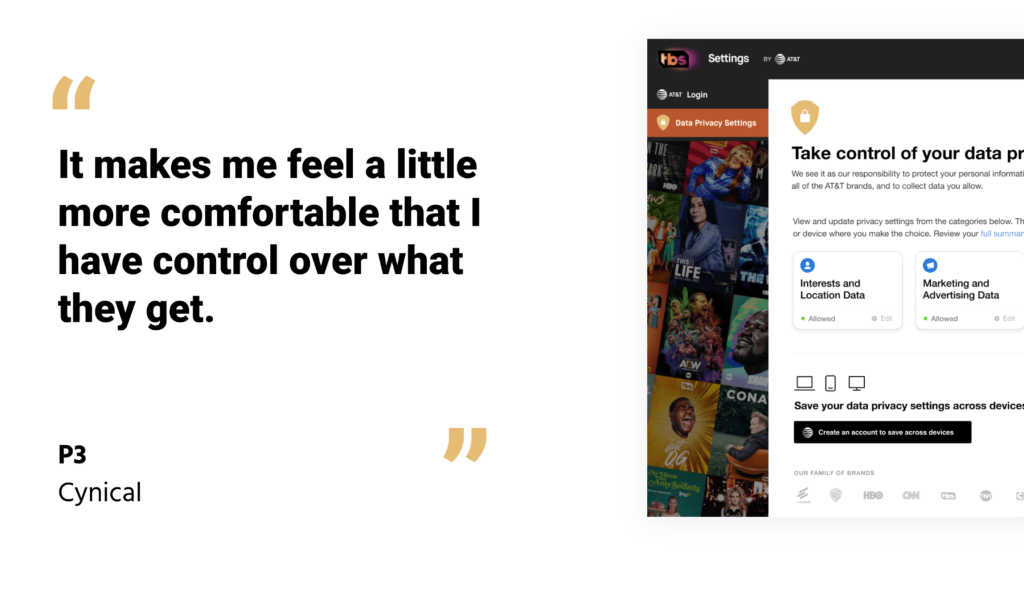

Before launching, make a final pass with a focus on UX copy. Be clear with users about how their data is being used and stored. This will help you build trust with them. Make sure it isn’t just lip service: you need to do what you say! We did extensive work with WarnerMedia around data privacy and found that people wanted to know, in simple and easy-to-understand language, that they could control what data they were sharing. Being too verbose or using too much technical jargon resulted in less trust and a negative brand perception.

Security testing

Run security checks and follow best practices to make sure there are no potential leaks. In addition to testing, we recommend end-to-end security patterns:

- Implement a zero-trust security model, which assumes that no actor, inside or outside the network, can be trusted.

- Encrypt data both in transit and at rest to prevent misuse.

- Use automated dataset experiments with tools like LangSmith to test and validate AI responses for accuracy, safety, and security.

- Set up continuous monitoring tools to track system activity, detect anomalies (e.g., unusual API usage, potential injection attacks), and trigger alerts for action.
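As one example of what detecting unusual API usage can mean in practice, the sketch below flags hours where call volume jumps far above the recent norm. It is a simplified illustration, not a monitoring product: the 24-hour window, the z-score threshold of 3, and the sample traffic are all assumptions.

```python
from statistics import mean, stdev


def find_spikes(counts: list[int], window: int = 24, z_threshold: float = 3.0) -> list[int]:
    """Return the indexes whose value sits far above the preceding window's mean."""
    spikes = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        mu = mean(history)
        sigma = max(stdev(history), 1.0)  # floor avoids division by zero on flat traffic
        if (counts[i] - mu) / sigma > z_threshold:
            spikes.append(i)
    return spikes


# Made-up hourly API call counts: steady traffic, then one sudden burst.
hourly_calls = [100, 102, 98, 101, 99, 100, 103, 97] * 3 + [101, 450, 100]

for index in find_spikes(hourly_calls):
    print(f"Alert: hour {index} had {hourly_calls[index]} calls")
```

A real deployment would route these alerts to whoever is on call and would look at more signals than raw volume, such as error rates and prompt content.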

Pilot launch & observability

You are cooking now! You have software. The guardrails are in place. It is now time to slowly start seeing the ROI.

We always recommend beginning with a small-scale pilot. This allows us to test the AI system in a controlled environment, which minimizes risk while validating real-world performance.

Set up a management platform (e.g. LangSmith or Haystack) that lets all users, technical and non-technical, view every LLM chain invoked on a per-trace basis, including sub-calls, for detailed monitoring of performance, costs, and behavior.

Add aggregation dashboards to the management platform for tracking and optimizing spending, performance, and system-wide metrics across the LLM application(s).
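The kind of roll-up such a dashboard performs can be sketched in a few lines. This is not the API of LangSmith or any other platform: the trace fields (`chain`, `latency_ms`, `cost_usd`, `error`) and the sample records are hypothetical, chosen only to show the aggregation.

```python
from collections import defaultdict

# Hypothetical per-trace records, as they might be exported from a tracing tool.
traces = [
    {"chain": "summarize", "latency_ms": 800, "cost_usd": 0.004, "error": False},
    {"chain": "summarize", "latency_ms": 1200, "cost_usd": 0.006, "error": True},
    {"chain": "classify", "latency_ms": 300, "cost_usd": 0.001, "error": False},
]


def aggregate(records: list[dict]) -> dict[str, dict]:
    """Group traces by chain and compute call count, cost, latency, and error rate."""
    buckets: dict[str, list[dict]] = defaultdict(list)
    for record in records:
        buckets[record["chain"]].append(record)

    summary = {}
    for chain, items in buckets.items():
        calls = len(items)
        summary[chain] = {
            "calls": calls,
            "total_cost_usd": round(sum(r["cost_usd"] for r in items), 6),
            "avg_latency_ms": sum(r["latency_ms"] for r in items) / calls,
            "error_rate": sum(r["error"] for r in items) / calls,
        }
    return summary


for chain, stats in aggregate(traces).items():
    print(chain, stats)
```

Grouping by chain makes it easy to spot which part of the application is driving cost or errors during the pilot.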

Incorporate human-guided datasets and experiments within the management platform to refine AI behavior, integrating insights from human overrides and experimental results to drive continuous improvement.

Plus, we like to survey users inline for trust, which gives us real, on-the-fly insight into whether our initial trust-building work panned out as expected.

Celebrate success and governance

AI is new to most teams and organizations. And for many companies there is an inherent distrust of these systems. Creating a culture of trust around AI means sharing progress and wins with the team:

- Show how AI projects are helping the business.

- Share success stories to build excitement and support for future projects.

Design-Led AI helps companies build trust and achieve success with AI. By focusing on transparency, user feedback, and good data, businesses can overcome challenges and make AI work for them. With trust as the foundation, AI can drive growth and deliver long-term value.